Survey results: AI large language models and MS care

I am looking for examples of when your HCP gave you advice contrary to what an LLM told you and how you resolved the conflict.

You will be aware that I have an interest in AI and, in particular, large language models (LLMs) and how they are going to disrupt the practice of medicine, including the management of MS. I have been testing several different LLMs over the last 12 months and have tried to nudge you to try them as a tool for self-managing your MS.

We tried to get funding to create an MS-GPT (multiple sclerosis generative pre-trained transformer) but were unsuccessful. The biggest challenge to implementing LLMs in clinical practice is that they will be classified as medical devices and must, therefore, be CE marked as such (quality approved). This won’t happen soon, and I suspect the medical profession will push back on incorporating LLMs into clinical practice because they threaten the profession; turkeys don’t vote for Christmas.

That said, a week does not go by without someone with MS asking me to help interpret the output of an LLM query. In parallel, a growing body of research suggests that LLMs can outperform clinicians diagnostically when given the same information. To test the waters, I ran a short survey a few weeks ago, and these are the results.

Please see my newsletter, ‘Turkeys don’t vote for Christmas’ (02-Jan-2025).

The survey

Survey response dates: 02/01/2025 to 10/01/2025

Number of responders: 64

Average age: 54 years (range 32-73)

Sex: females 66% / males 34%

Mean EDSS: 5.0 (range 0.0-8.0)

Type of MS

Main survey results

Understanding of LLMs among people with MS (pwMS) is variable, and there is a lot of work to be done to improve it. Would an online tutorial explaining what LLMs are and how to use them help?

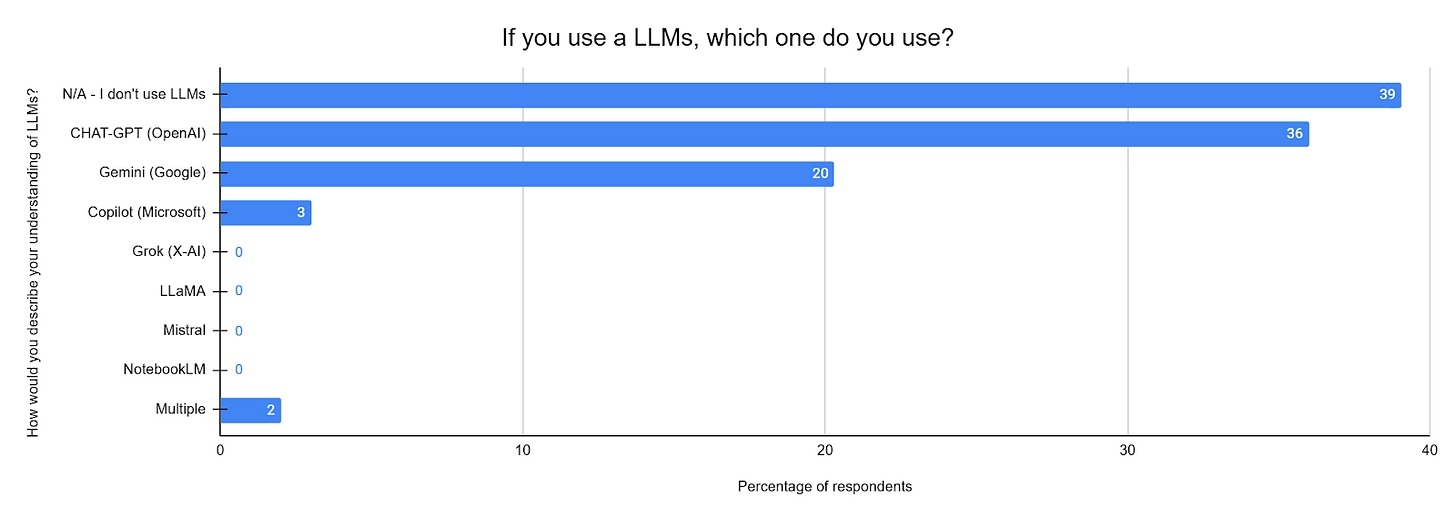

Most survey respondents either don’t use LLMs or use them infrequently. This will likely change in the next 12-24 months, given their power to provide high-quality answers to medical questions. Do you agree?

ChatGPT and Gemini are dominant. I use them both, but I now favour Gemini as it is better integrated into my workflow. I am a massive user of NotebookLM, part of the Gemini suite of applications.

Respondents remain sceptical of LLMs and have low trust in them. I suspect this will change as pwMS become used to them. Am I wrong? LLMs are very useful for helping experts automate tasks and improve productivity.

pwMS seem reluctant to discuss their LLM queries with their healthcare professionals (HCPs). I wonder why.

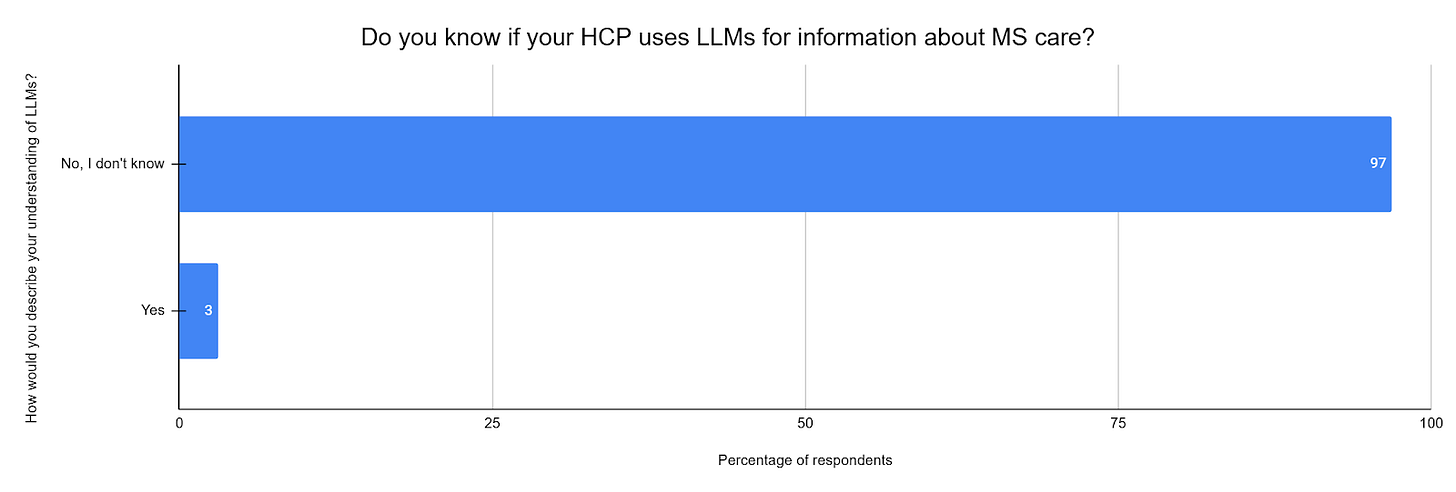

The few respondents who did ask their HCP about the output from an LLM got pushback. In future, it will be interesting to explore why this happened. Was the HCP sceptical? Did they not have time to respond to the query? Did they not think it was part of the consultation? I suspect HCPs will be slow to adopt LLMs, particularly when they are used in this way.

This is not surprising. Most of my colleagues would not admit to using an LLM to aid their clinical practice. It's eye-opening how reliable the results are. Gemini also gives you references so you can check its answers. My prediction is that within one or two years, LLM-enabled medical assistants will be routine.

There is a trend for pwMS to want their HCPs to use LLMs. I suspect they think this will improve their HCPs' clinical performance, which is good news.

Most of the points raised in this survey will change rapidly, so I plan to revisit this issue. I am looking for examples of when your HCP gave you advice contrary to what an LLM told you and how you resolved the conflict.

Subscriptions and donations

MS-Selfie newsletters and access to the MS-Selfie microsite are free. In contrast, weekly off-topic Q&A sessions are restricted to paying subscribers. Subscriptions are used to run and maintain the MS-Selfie microsite, as I don’t have time to do it myself. If you want to ask questions unrelated to the Newsletters or Podcasts, you must be a paying subscriber. If you can’t afford to become a paying subscriber, please email a request for a complimentary subscription (ms-selfie@giovannoni.net).


General Disclaimer

Please note that the opinions expressed here are those of Professor Giovannoni and do not necessarily reflect the positions of Queen Mary University of London or Barts Health NHS Trust. The advice is intended as general and should not be interpreted as personal clinical advice. If you have problems, please tell your healthcare professional, who will be able to help you.

Having been diagnosed with MS 31 years ago, I've seen many ideas about treatments come and go. I've seen theories on the cause of MS do the same. Any AI tool based on iterations of whatever is in fashion at the moment might look very strange a few years later. I would never trust those answers and would prefer that high-end research articles were more accessible instead of being hidden behind paywalls. You make an effort to stand at the top of a cliff and yell out what you think is right. Most don't even try. It would terrify me to think any provider got his information from machine learning. It's the modern equivalent of the self-help paperback. I would rather trawl through a variety of well-researched but contradictory opinions than be stuck with an answer based on plagiarising the most common opinion.

Interestingly, only a couple of days ago I sent my son, who’s a solicitor, a link to an article on the BBC News website.

It describes the conclusions of research undertaken by a leading UK law firm. Chatbots were put to the test using 50 questions about English law. This repeated earlier research, and although the chatbots had improved considerably, ‘they made mistakes, left out important information and invented citations.’

Conclusion: ‘the tools are starting to perform at a level where they could assist in legal research, but there were dangers in using them if lawyers don’t already have a good idea of the answer’

This has definitely reinforced my view that AI is useful but, dare I say, should be used with a degree of hesitation: the information it provides needs to be double-checked for accuracy and reliability.